How to communicate the reality of AI-assisted engineering in today’s hype cycle

Show real use cases, outline the cost of enablement, and tell a story with data.

Welcome to the latest issue of Engineering Enablement, a weekly newsletter sharing research and perspectives on developer productivity.

How Uber uses AI to analyze developer feedback — On June 25, I’m hosting a live session with Abhishek Tibrewal from Uber on how they’re using AI to analyze developer feedback and uncover hotspots. We’ll see a live demo and discuss how it’s reshaped the way their Platform team sets priorities and engages leadership. Sign up here.

This week, DX CTO Laura Tacho is sharing guidance for having better conversations with execs about the impact of AI.

A lot of engineering leaders are feeling the pressure: execs have been sold on massive productivity gains, and as a result many have inflated expectations. It’s on engineering leaders to ground these conversations in reality by focusing on what the tools are actually being used for, the impact they’re having so far, and what it’ll take to enable teams to get more out of AI.

Here’s Laura with a practical guide to help.

Let’s face it: there’s a lot of hype about AI floating around, and lots of overblown promises about immediate 10x productivity across the organization. Just scrolling through LinkedIn while drinking your morning coffee will put you face-to-face with claims that AI can fully replace junior engineers, security teams, and code reviews, or let you ship something in 20 minutes that might have taken 20 weeks.

For non-technical executives and stakeholders, it can be difficult to distinguish between an authentic claim and an overstated one, simply because they don’t have hands-on experience with the tools and processes that AI tools claim to replace or greatly accelerate.

As an engineering leader, you have an important role to play in communicating the realistic value of AI to your business stakeholders. It’s your job to show your partners the gap between hyped promises and practical implementation: walk them through real use cases for AI in your organization, help them understand the support and enablement needed to see real ROI, and share data on the value of AI in ways that matter to them.

Discuss real use cases by focusing on the problem first, and the tool second

Tools need to solve real problems for your business, and AI tools are no different. It’s easy for anyone to be drawn in by the product promises of AI vendors. This often reaches engineering leaders as a question like: “Are we falling behind on this? Microsoft is writing 30% of their code with AI.”

While executives and senior leaders need a reasonable understanding of software development methodologies, they won’t be experts in every tool and process, so they may lack the background to understand why something may or may not work in your organizational context. Instead of dismissing a question like this, recognize that part of an engineering leader’s job is to educate non-engineering stakeholders on engineering tools and processes (to a reasonable degree). In this case, share more about your approach and evaluation criteria for purchasing and adopting a tool.

Rule #1: Tools must solve problems. Share more about the strategy of adopting a tool, starting with the most important bit: tools need to solve concrete problems for your business. A clear understanding of the biggest points of friction in your software development processes keeps this conversation focused on what you know is impacting your business right now, instead of on a bold promise about something that’s not on the critical path.

Reassure stakeholders that there are existing avenues for discovery and experimentation. Being pragmatic about tool adoption doesn’t mean there’s no time or place for experimentation, exploration, and rapid prototyping. Many executives fear that if the business is slow to adopt AI, it will be left in the dust. Reassure your partners that you are staying on top of this rapidly evolving area and continually looking for new ways AI can be a multiplier for your business.

Outline the real cost of enablement and support needed for tooling success

Adopting tools is resource-intensive. For some AI tools, the productivity benefits are clear (more about measuring them later), and quickly outweigh the cost of investment. But there’s no free lunch here. It’s not as simple as giving developers access to the tool; there needs to be an adequate amount of support around the rollout in order for it to be adopted and used effectively, and this is especially true in larger organizations with more complexity.

Developers need training and support. Despite the genuine excitement surrounding these tools, the path to widespread developer adoption and effective usage remains challenging. Our research consistently reveals a fundamental barrier: AI-assisted coding requires new techniques that many developers haven't yet mastered. Without clear guidance and established practices, developers struggle to integrate these tools efficiently into their daily workflows, particularly when facing tight deadlines. DX’s Guide to AI-Assisted Engineering will help your organization close this gap, providing concrete strategies for executives, managers, and developers to increase the value from AI tools.

Executive support is a make-or-break factor for widespread AI adoption. Successful deployment of AI code assistants demands commitment from the top, and a clear policy is one concrete form that commitment takes. DORA’s recent report on AI in software development found that organizations had 451% higher adoption when there was a defined acceptable use policy.

Having transparent conversations about the role of AI is also important. You can reduce fear and distrust about AI tooling by explaining that these tools are meant to augment and accelerate your teams, not replace them. Remind engineers that this is an opportunity to learn about techniques that are likely to benefit them for the remainder of their careers.

Communicate the value of AI in a way that matters to the business

We agreed a long time ago that lines of code don’t equal productivity, but a lot of us are still trying to communicate the impact of GenAI tooling to our non-technical stakeholders by relying solely on metrics such as code suggestions, acceptances, and the percentage of lines of code written by AI. Utilization metrics like these are important, but they don’t tell the full story. Instead of focusing solely on output, you need to clearly articulate the benefits this paradigm shift is bringing to your organization.

Take a multi-dimensional approach to measurement. Measuring the productivity of your engineering organization has always been complex, and introducing AI doesn’t change the fundamental concepts. At the same time, you need specific measurements of how the tool is being used, so you can see what’s working and adapt when something is going sideways. A proven productivity framework like the DX Core 4 can help streamline conversations about AI impact by aligning stakeholders on a common definition of productivity and on which metrics matter most.

Then, add in metrics about AI’s utilization (# of active users), impact (time saved per developer), and cost (AI spend per developer). Only when you marry these AI-focused metrics with existing measures of engineering performance can you get a complete picture of AI’s impact on your org.
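To make the three AI-focused categories concrete, here is a minimal sketch of how utilization, impact, and cost might be computed for one reporting period. It assumes hypothetical per-developer records: the `DeveloperRecord` fields, the definition of an "active user", and the single monthly spend figure are illustrative assumptions, not DX’s or any vendor’s schema.

```python
from dataclasses import dataclass


@dataclass
class DeveloperRecord:
    # Hypothetical fields; adapt to whatever your telemetry and surveys provide.
    name: str
    active_ai_user: bool          # used an AI tool during the period (assumed definition)
    hours_saved_per_week: float   # self-reported estimate


def ai_metrics(devs: list[DeveloperRecord], monthly_ai_spend: float) -> dict[str, float]:
    """Summarize utilization, impact, and cost for one reporting period."""
    total = len(devs)
    active = [d for d in devs if d.active_ai_user]
    return {
        # Utilization: how many developers actually use the tools.
        "active_users": len(active),
        "adoption_rate": len(active) / total if total else 0.0,
        # Impact: average weekly time saved among active users only.
        "avg_hours_saved_per_active_dev": (
            sum(d.hours_saved_per_week for d in active) / len(active) if active else 0.0
        ),
        # Cost: spend spread across the whole org, not just active users.
        "spend_per_developer": monthly_ai_spend / total if total else 0.0,
    }


devs = [
    DeveloperRecord("a", True, 3.0),
    DeveloperRecord("b", True, 1.0),
    DeveloperRecord("c", False, 0.0),
    DeveloperRecord("d", True, 2.0),
]
print(ai_metrics(devs, monthly_ai_spend=400.0))
```

On their own these numbers are still just utilization, impact, and cost figures; the point of the paragraph above is that they become meaningful only when reported alongside your existing engineering performance measures.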

Keep an eye out for more research-backed guidance on measuring AI’s impact from Abi and me in the next couple of weeks.

Make the metrics matter to your audience. Even with a solid framework like the DX Core 4, you’ll want to supplement with metrics that matter most to your audience.

Executive team: time to market, security posture, capacity for innovation, risk reduction, tooling ROI.

Product leaders: cycle time, product quality, rate of experimentation, capacity for innovation.

Finance team: operational costs, resource allocation, tooling ROI.

Developers: time savings, reduced toil, increased efficiency.

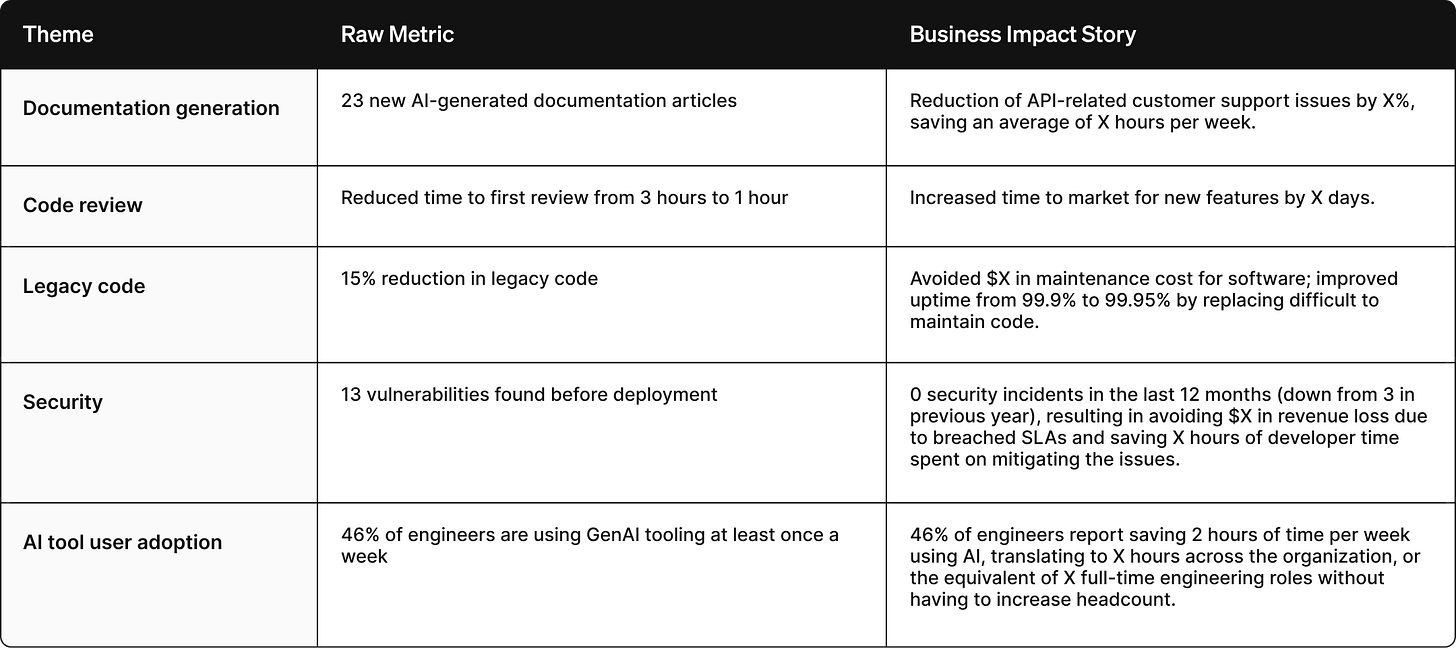

Tell a story with data, don’t just report on the numbers. When presenting metrics to stakeholders, transform raw figures into meaningful narratives that illustrate real business impact. Remember, the focus is on the problem first, tool second, so use numbers to show how the problem is being solved. Below are a few examples to reference.

Share metrics on usage and adoption to strengthen the ROI story. Output metrics like the percentage of code delivered with AI assistance do have their place when reporting on the efficacy of tools, but only with proper context around business impact. As in the example above, go beyond simple output and usage metrics and share the impact on the business—whether that’s time saved, increase in developer experience ratings, or something else.

These metrics are also important for reporting on the success of enablement functions. By knowing which populations are using the tools most effectively and which ones are in need of more support, you can create targeted training campaigns to boost adoption of your tools and learn about points of friction when it’s early enough to intervene.
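As an illustration of finding the populations that need more support, the sketch below groups adoption by team and flags teams below a threshold, lowest adoption first. The input shape (one `(team, is_active_user)` pair per developer) and the 50% default threshold are assumptions chosen for the example, not recommended values.

```python
from collections import defaultdict


def teams_needing_support(
    usage: list[tuple[str, bool]], threshold: float = 0.5
) -> list[tuple[str, float]]:
    """Return (team, adoption_rate) for teams below `threshold`, lowest first.

    `usage` holds one (team, is_active_user) pair per developer (assumed shape).
    """
    counts: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # team -> [active, total]
    for team, is_active in usage:
        counts[team][1] += 1
        if is_active:
            counts[team][0] += 1
    rates = [(team, n_active / n_total) for team, (n_active, n_total) in counts.items()]
    flagged = [(team, rate) for team, rate in rates if rate < threshold]
    # Lowest adoption first, so targeted training can start where the gap is largest.
    return sorted(flagged, key=lambda pair: pair[1])


usage = [
    ("payments", True), ("payments", False), ("payments", False),
    ("search", True), ("search", True),
    ("mobile", False),
]
print(teams_needing_support(usage))
```

Here `search` is not flagged, while `mobile` and `payments` surface as candidates for a targeted enablement campaign.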

Use self-reported data for a comprehensive assessment of gains across tools and processes. Many developers use more than one AI tool in their daily work, for different purposes. This fragmentation across tooling ecosystems often makes gathering perfect usage metrics very difficult. Self-reported data on time savings is especially useful for building a complete picture when automated tracking is unavailable or would require too much investment. Well-designed surveys can reveal productivity patterns that system logs miss entirely.

These reports often uncover unexpected benefits, like reduced context switching or improved focus, that quantitative metrics fail to capture. Triangulate self-reported information with available system data to validate patterns and identify discrepancies. While some engineering leaders dismiss self-reported metrics as unreliable, this approach becomes invaluable when tracking impact across disconnected tools and workflows where comprehensive monitoring is not available. The key is consistent collection and transparent acknowledgment of data limitations while still using these insights to guide your AI implementation strategy.
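One way to triangulate is to compare each developer’s survey answer with whatever system data you do have and sort the mismatches into follow-up buckets. This is a sketch under stated assumptions: both inputs are keyed by a hypothetical developer ID, telemetry only covers the tools you can instrument, and the 1-hour cutoff for a "reported gain" is arbitrary.

```python
def triangulate(
    self_reported_hours: dict[str, float],
    telemetry_sessions: dict[str, int],
    min_hours: float = 1.0,
) -> dict[str, list[str]]:
    """Bucket developers by whether survey answers and system data agree."""
    buckets: dict[str, list[str]] = {
        "consistent": [],
        "reported_without_telemetry": [],
        "telemetry_without_reported_gain": [],
    }
    for dev in sorted(set(self_reported_hours) | set(telemetry_sessions)):
        reports_gain = self_reported_hours.get(dev, 0.0) >= min_hours
        has_usage = telemetry_sessions.get(dev, 0) > 0
        if reports_gain and not has_usage:
            # May reflect tools outside your instrumentation, not an inaccurate answer.
            buckets["reported_without_telemetry"].append(dev)
        elif has_usage and not reports_gain:
            # Tool is used but not perceived as saving time: a candidate for enablement.
            buckets["telemetry_without_reported_gain"].append(dev)
        else:
            # Both sources agree (gain with usage, or neither).
            buckets["consistent"].append(dev)
    return buckets


survey = {"ana": 3.0, "ben": 0.5, "cai": 2.0}
sessions = {"ana": 12, "ben": 8, "dee": 0}
print(triangulate(survey, sessions))
```

Treating the mismatch buckets as questions to investigate, rather than as proof that one source is wrong, is in keeping with the transparent acknowledgment of data limitations described above.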

Your next steps

Get access to metrics

Use the DX Guide to AI-Assisted Engineering as part of your enablement campaign to increase proficiency with GenAI tooling across your development organization

Use GenAI impact reports like the one in DX to better communicate ROI

Strengthen your data storytelling skills to make the metrics matter to your target audience

As an engineering leader, you have the responsibility—and the opportunity—to shape how your organization perceives and adopts AI technologies. By cutting through the hype and providing realistic, balanced assessments, you can help your company make sound investments that deliver genuine value.

The most sustainable approach to AI adoption isn't chasing every shiny new tool or making grandiose claims. It's thoughtfully integrating AI capabilities into your engineering processes in ways that address real challenges and deliver measurable improvements.

By serving as the voice of reason amid the AI hype storm, you'll not only protect your organization from costly disappointments but also position it to capture the very real benefits these technologies can offer when applied with clarity and purpose.

Who’s hiring right now

This week’s featured DevProd & Platform job openings. See more open roles here.

Dropbox is hiring multiple Infrastructure and AI Dev Tools roles | US (Remote)

Atlassian Williams Racing is hiring a Platform Engineer and Software Engineer - Engineering Acceleration | Grove, UK

LEGO is hiring a Lead Engineer - GenAI Tech Enablement | Copenhagen, DK

ScalePad is hiring a Head of AI Engineering & Enablement | Canada (Remote or in-office)

Capital One is hiring a Manager, Product Management - Platform | Plano, TX and McLean, VA

That’s it for this week. Thanks for reading.

-Abi

Hi! I haven't read the Guide to AI-Assisted Engineering yet, but it seems to me that in the "Take a multi-dimensional approach to measurement." paragraph, we are missing some opportunity costs.

The first is the risk of getting locked into technologies that are "brand new": what if a supplier suddenly changes its business model? This field is so murky that we can't find a reasonable substitute out of the blue, as we can for commodities.

The second: environmental issues. We should take care of our environment in every aspect of our lives. Period. Or at least be conscious of it :-)

And finally, what about the fact that we are delegating too much to AI? I am not a Luddite :-) I want AI to be used for meaningful things, and at the same time I still want the opportunity to exercise my brain.

Otherwise, when our beloved AI tools go down from time to time, we won't be able to do anything: the younger ones because they never walked alone, the older ones because they will have forgotten :-)