Why developers lose trust in AI tools

Giving developers more control over their experiences with AI can help them build an appropriate level of trust and ultimately improve adoption.

This is the latest issue of Engineering Enablement, a weekly newsletter covering the data behind world-class engineering organizations.

This week I read Understanding and Designing for Trust in AI-Powered Developer Tooling, the latest paper in Google’s Developer Productivity for Humans series. In earlier research, Google’s team explored why developers do or don’t adopt AI tools, and found that trust is a key factor. This paper builds on that line of research by looking more closely at what determines whether developers trust AI tools.

My summary of the paper

According to a survey from Stack Overflow, nearly one-third of developers distrust AI-generated recommendations. Google’s research team wanted to better understand the causes of this distrust, and to provide recommendations for building or implementing AI tools that developers find reliable and worth using.

The researchers conducted a mixed-methods study. They started with the company’s EngSat survey, which asked developers how much they trust the output of AI tools and how these tools affect their overall experience. The survey drew over 1,000 comments, which were then categorized by theme and by general sentiment (positive, neutral, or negative).

The team then complemented the survey data with in-depth interviews to capture more nuanced insights about developers' experiences. They categorized the findings by grouping developers based on their self-reported levels of trust and productivity. These insights were then translated into design recommendations aimed at addressing the trust barriers that emerged from the data.

Here’s what they learned.

Causes of distrust among developers

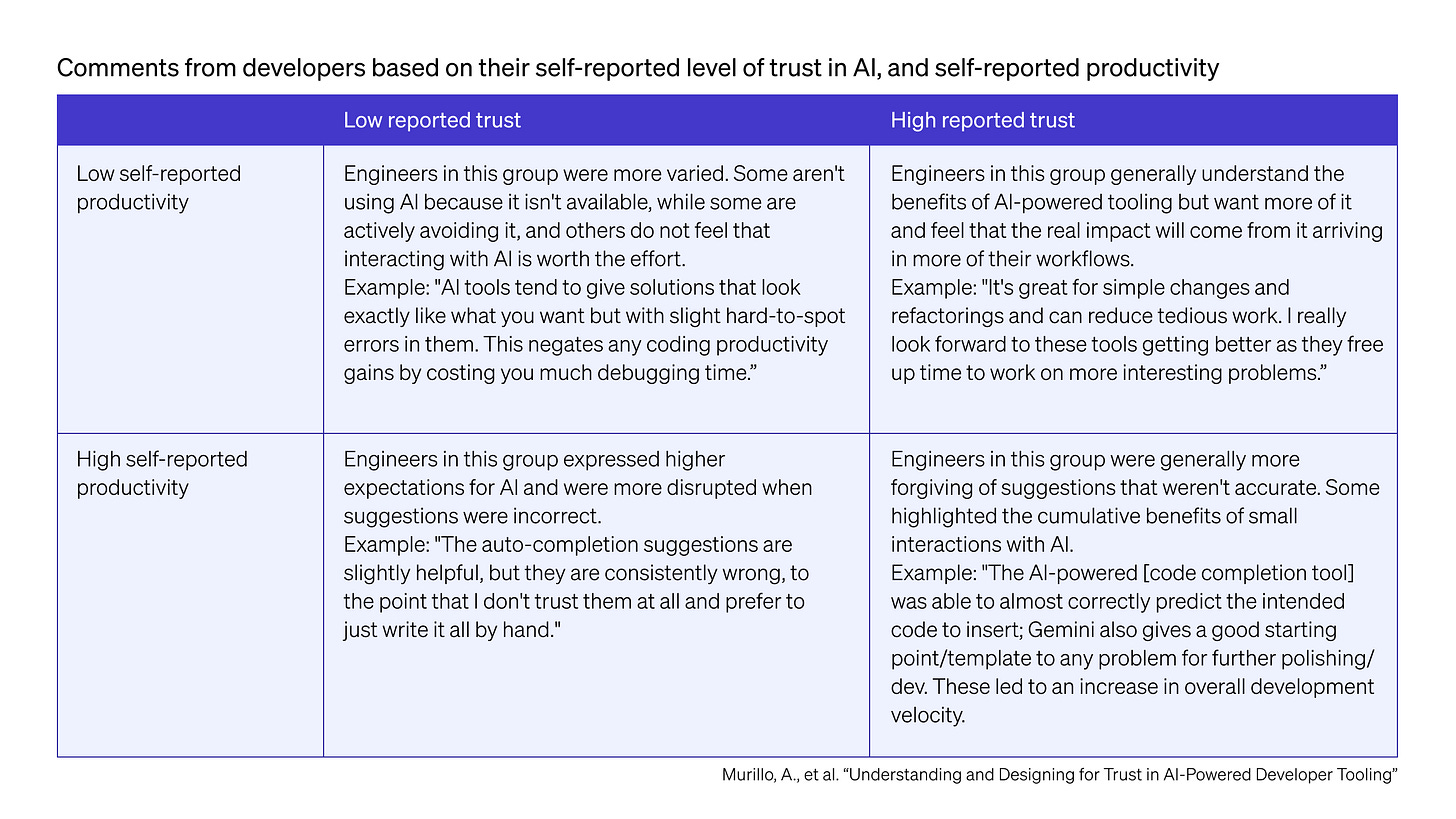

When analyzing survey data, the researchers segmented responses based on developers’ level of trust in AI tools, as well as their self-reported productivity.

They found that developers’ experiences with AI tools could be categorized into four distinct groups:

Developers with high trust and high productivity were generally more forgiving of inaccurate AI suggestions, valuing the cumulative benefits of even small interactions with AI tools.

Developers with high trust and low productivity wanted AI tools to have a more significant impact on their workflows, expecting greater benefits as the technology matures.

Developers with low trust and high productivity had higher expectations for AI, and were more disrupted when suggestions were incorrect.

Developers with low trust and low productivity had more varied responses: some weren’t using AI at all, while others felt that interacting with AI wasn’t worth the effort.
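The segmentation above can be sketched in a few lines of Python. This is only an illustration of the two-by-two grouping, not the researchers' actual method: the 1 to 5 rating scale, the cutoff of 4, and the names `Response`, `segment`, and `count_segments` are all assumptions made for the example.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Response:
    """One hypothetical survey response (the 1-5 scales are an assumption)."""
    trust: int
    productivity: int


def segment(response: Response, cutoff: int = 4) -> str:
    """Place a response in one of the four trust/productivity groups.

    Scores at or above `cutoff` count as "high"; the cutoff is illustrative.
    """
    trust = "high" if response.trust >= cutoff else "low"
    productivity = "high" if response.productivity >= cutoff else "low"
    return f"{trust} trust, {productivity} productivity"


def count_segments(responses: list[Response], cutoff: int = 4) -> Counter:
    """Count how many responses fall into each group."""
    return Counter(segment(r, cutoff) for r in responses)


if __name__ == "__main__":
    sample = [Response(5, 4), Response(4, 2), Response(2, 5), Response(1, 1)]
    for group, count in count_segments(sample).items():
        print(f"{group}: {count}")
```

Where the cutoff sits changes which group a borderline respondent lands in, so any real analysis would need to justify that choice.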

A key finding from this analysis is that developers with high trust aren’t blindly relying on suggestions from AI tools—rather, they’ve established an appropriate level of trust. To help other developers achieve an appropriate level of trust, the authors make the case for allowing them to have more control over when and how they receive AI suggestions.

For example, take the ‘low trust’ group who are more disrupted by incorrect suggestions: this group might need an option to see fewer suggestions, or to only see them in a particular context. Conversely, developers who have high levels of trust may want to see more suggestions, even if the suggestions are less likely to be completely correct.

Making AI tools more customizable

The researchers focus on two ways AI tools can better support developers: giving developers control over the frequency and the confidence level of the suggestions they receive. They argue that these two settings will have the biggest impact on the developer experience. For example, developers could adjust the confidence level of the suggestions they receive, perhaps opting to see only high-confidence suggestions in specific contexts.
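To make the two controls concrete, here is a minimal sketch of how a tool might apply them. It is not taken from the paper or from any Google tool: the `Suggestion` and `SuggestionSettings` types, the `select_suggestions` function, the 0 to 1 confidence score, and the per-minute frequency cap are all hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Suggestion:
    text: str
    confidence: float  # hypothetical model score in [0, 1]


@dataclass(frozen=True)
class SuggestionSettings:
    """User-adjustable controls; the default values are assumptions."""
    min_confidence: float = 0.5  # hide suggestions scored below this
    max_per_minute: int = 6      # cap on how often suggestions appear


def select_suggestions(
    candidates: list[Suggestion],
    settings: SuggestionSettings,
    shown_in_last_minute: int = 0,
) -> list[Suggestion]:
    """Return the suggestions to show, highest confidence first."""
    # Frequency control: how many more suggestions fit under the cap.
    remaining = max(settings.max_per_minute - shown_in_last_minute, 0)
    # Confidence control: drop anything below the user's threshold.
    eligible = [s for s in candidates if s.confidence >= settings.min_confidence]
    eligible.sort(key=lambda s: s.confidence, reverse=True)
    return eligible[:remaining]


# Two hypothetical profiles: one for developers who are disrupted by wrong
# suggestions, one for developers who want to see more of them.
cautious = SuggestionSettings(min_confidence=0.9, max_per_minute=2)
permissive = SuggestionSettings(min_confidence=0.3, max_per_minute=20)
```

With these profiles, a developer who is easily disrupted by incorrect suggestions would see only the few the tool scores highest, while a more forgiving developer would see most of what the tool produces.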

The authors also explored the challenge of designing AI settings that are both intuitive and transparent. Confidence levels, for example, can be difficult to communicate accurately without creating unrealistic expectations. Additionally, the impact of settings like frequency and confidence can vary based on the context, adding further complexity to the design process.

The paper goes on to describe several ideas that Google’s team has tested to give developers more control over AI settings. They offer key recommendations for designing these settings:

Provide diverse entry points to make it easier for developers to discover AI settings.

Be thoughtful with default settings since many developers won’t customize them.

Help developers understand the tradeoffs associated with AI settings, especially given the probabilistic nature of AI tools.

Final thoughts

This paper offers a potential path forward for leaders who aren’t seeing the adoption they expect from AI tools. By offering better settings, and by ensuring these settings are intuitive, they can help developers build an appropriate level of trust and ultimately improve adoption.

Who’s hiring right now

Here’s a roundup of Developer Productivity job openings.

Adobe is hiring a Sr Engineering Manager - DevEx | San Jose

The Trade Desk is hiring a Director of Product Management - DevEx and Platform | San Jose

Multiverse is hiring a Senior DevOps Engineer | UK

SiriusXM is hiring a Staff Software Engineer - Platform Observability | US

That’s it for this week. Thanks for reading.

-Abi
